Introduction

Are you interested in running Large Language Models (LLMs) on your personal computer? If so, Ollama is an excellent choice! Unlike closed-source services such as ChatGPT or Claude, Ollama is an open-source tool for running openly available models on your own hardware, so you can build AI applications without paying for API access. Plus, if you’re concerned about privacy, Ollama is a great fit: the models run locally, so your prompts and data never have to leave your machine.

In this guide, we’ll walk you through the simple steps to download and install Ollama, pull a model, and run it, so you can explore the world of LLMs from the comfort of your own device.

Step 1: Download Ollama

The first step is to get Ollama installed on your local machine. It supports all major operating systems, including Windows, macOS, and Linux. To begin, head over to the official Ollama website and navigate to the downloads section.

Installation Process for Different Operating Systems

Windows Installation

- Download the Windows installer from the official website.

- Run the installer and follow the on-screen instructions.

macOS Installation

- Download the macOS version, a .zip archive containing Ollama.app.

- Unzip the archive and move Ollama.app into the Applications folder.

Linux Installation

For Linux users, the installation process is straightforward. Simply open the terminal and execute the following command:

curl -fsSL https://ollama.com/install.sh | sh

Once the installation is complete, you’ll have Ollama set up on your system, ready to pull and run various LLMs.
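To confirm the install worked on macOS or Linux, you can check that the `ollama` command is on your PATH and print its version. Here is a minimal sketch, assuming a POSIX-compatible shell such as bash or zsh; `check_ollama` is a hypothetical helper name, not part of Ollama.

```shell
# check_ollama is a hypothetical helper, not an Ollama command.
# It verifies that the ollama CLI is installed and prints its version.
check_ollama() {
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama not found on PATH; try re-running the installer" >&2
    return 1
  fi
  # Print the installed version.
  ollama --version
}
```

Paste the function into your terminal and run `check_ollama`. On Windows, running `ollama --version` in PowerShell performs the same check.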

Step 2: Download an LLM

Now that Ollama is installed, the next step is to pull a model that fits your needs. Ollama supports many models, each suited to different tasks, and you can browse them in the Models section of the official website.

How to Pull a Model

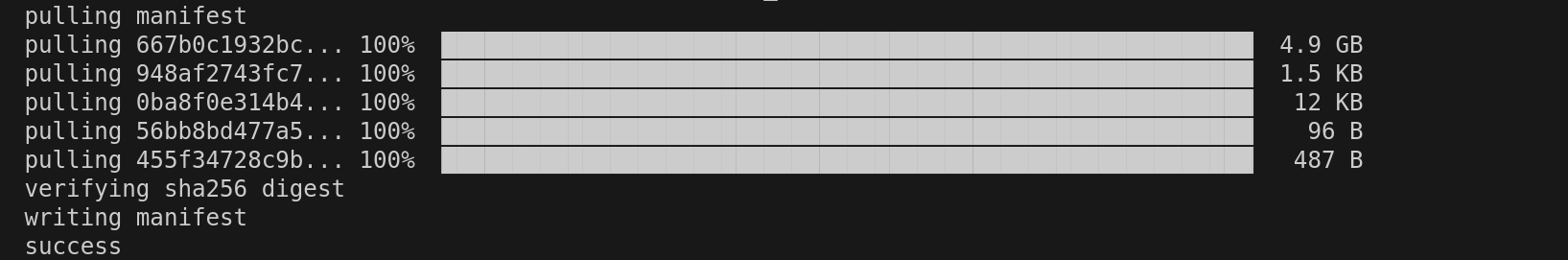

For this guide, we’ll use the Llama 3.1 model. To download it, run the following command in your terminal:

ollama pull llama3.1

The model is approximately 4.9GB, so depending on your internet speed, the download may take a few minutes. Once it completes, you’ll be ready to run the model on your local system.
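After the pull finishes, `ollama list` shows the models stored on your machine. If you want to script that check, here is a minimal sketch; `has_model` is a hypothetical helper, and it assumes `ollama list` prints a header row followed by one model per line, with the name (such as `llama3.1:latest`) in the first column.

```shell
# has_model is a hypothetical helper, not an Ollama command.
# It succeeds if the given model appears in `ollama list`.
has_model() {
  # Skip the header row, keep only the NAME column, then match the name
  # exactly, either as given or with the default ":latest" tag appended.
  ollama list | awk 'NR > 1 { print $1 }' | grep -qxF -e "$1" -e "$1:latest"
}
```

For example, `has_model llama3.1 && echo "llama3.1 is ready"` prints the message only once the model has been pulled.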

Step 3: Run the Model

After successfully downloading the model, you can start using it by running the following command:

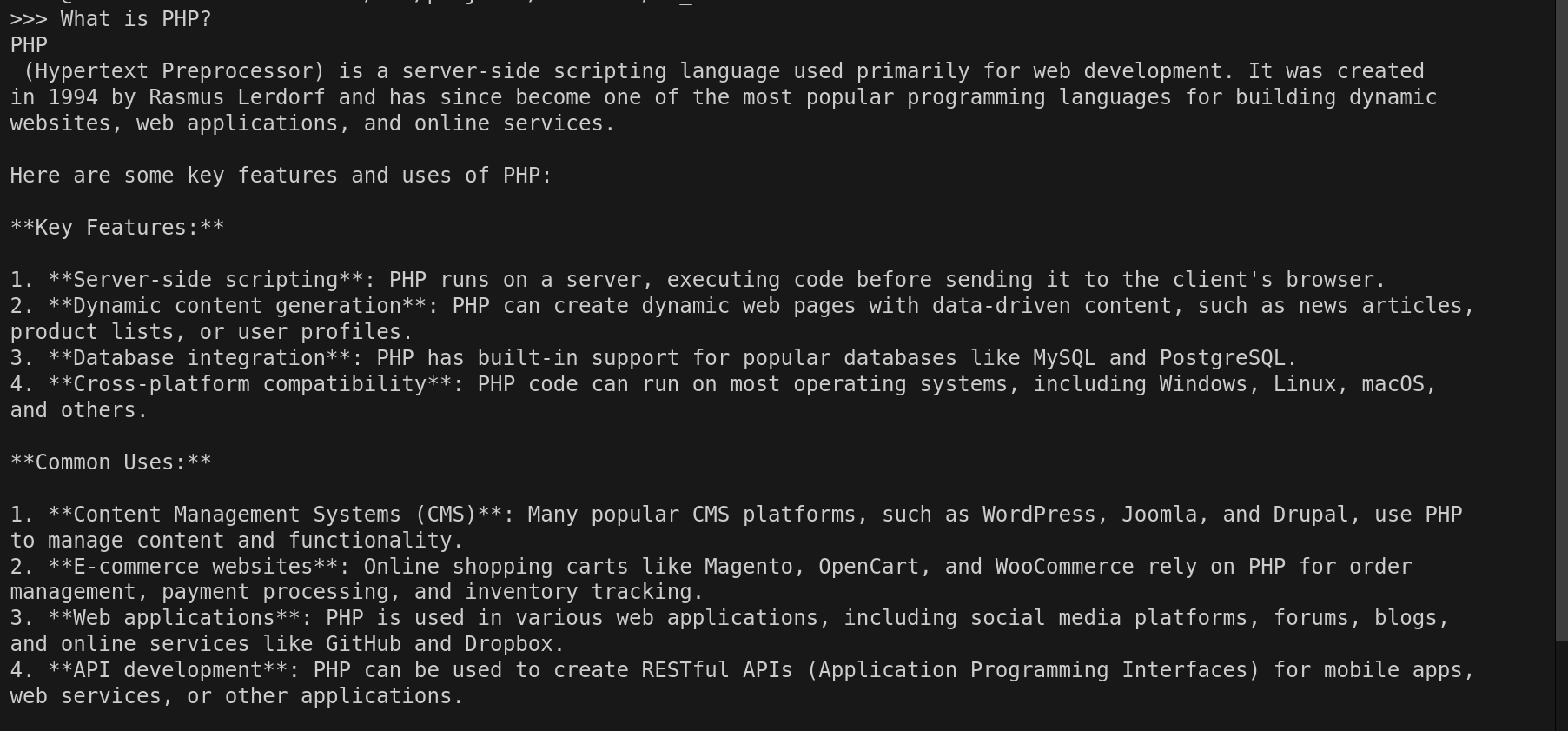

ollama run llama3.1

This command opens an interactive chat session in your terminal where you can talk to Llama 3.1, much like ChatGPT. Type a question and press Enter, and the model generates its response in real time. When you’re done, type /bye to leave the session.
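If you only need a single answer, for example from a script, `ollama run` also accepts the prompt as an argument: it prints the reply and exits instead of opening the interactive session. The wrapper below is a minimal sketch; `ask` is a hypothetical helper name.

```shell
# ask is a hypothetical helper, not an Ollama command.
# Usage: ask MODEL PROMPT...
ask() {
  local model="$1"
  shift
  # Join the remaining arguments into one prompt string and pass it to
  # `ollama run`, which answers once and exits.
  ollama run "$model" "$*"
}
```

For example, run `ask llama3.1 "What is PHP?"`. Quote the prompt so the shell does not treat `?` as a filename wildcard.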

For example, let’s ask the model: What is PHP?

The response should be well-structured and informative, showcasing the model’s capabilities.
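Behind the CLI, Ollama serves a local HTTP API (by default on port 11434), which is how your own applications can talk to the model. The sketch below sends a question with `curl` to the `/api/generate` endpoint with streaming turned off, so the server replies with a single JSON object whose `response` field holds the answer. `ask_api` is a hypothetical helper; it assumes the Ollama server is running on the default address and that the prompt contains no double quotes or backslashes, because it does not JSON-escape its input.

```shell
# ask_api is a hypothetical helper, not part of Ollama.
# Assumes the Ollama server is listening on its default address.
# The prompt is NOT JSON-escaped, so avoid double quotes and backslashes in it.
ask_api() {
  curl -s http://localhost:11434/api/generate \
    -d "{\"model\": \"$1\", \"prompt\": \"$2\", \"stream\": false}"
}
```

Running `ask_api llama3.1 "What is PHP?"` prints the raw JSON reply; a JSON tool such as `jq` can pull out the `response` field if you need just the text.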

Conclusion

Ollama is a powerful open-source tool for running LLMs directly on your computer, giving you complete control over your data and an excellent platform for learning and experimentation. Whether you’re an AI enthusiast, a developer, or a student, it’s a great way to explore the vast potential of Large Language Models.

Start your journey with Ollama today and unlock the world of AI, all from your local machine!

By following this guide, you can seamlessly install and run Ollama on your system. If you found this tutorial helpful, feel free to share it with others who might be interested in exploring LLMs!